sam brown, explodingdog

Some examples are:

Some of these mental states represent or are about things. They present or concern the possibility of those things being one way rather than another. For instance, suppose someone believes that Queens is in Connecticut. This belief is about Queens, and it's about Queens being in Connecticut, rather than in some other place. I wish I could dance like Fred Astaire. This wish is about me, and about Fred Astaire, and it's about me dancing like him, rather than dancing some other way.

Mental states of this sort are known as intentional states. (This is a technical philosopher's use of the word "intentional." It doesn't mean that the states in question were ones you intended or decided to have.)

There's an important difference between having thoughts or beliefs about some object, and standing in other sorts of relations to that object. For instance, pretend the Superman story is true. Then we can say that Lois believes that the super-hero who defends Metropolis is strong. But she does not believe that the mild-mannered reporter at the Daily Planet is strong, even though the super-hero who defends Metropolis is the same person as the mild-mannered reporter at the Daily Planet. Contrast other, non-mental, relations Lois might stand in to Superman. If she kisses the super-hero, and the super-hero is the same person as the reporter, then it follows that she kisses the reporter, too. If she kicks the reporter in the knee, and the super-hero and the reporter are the same person, then it follows that she kicks the super-hero in the knee, too. But beliefs aren't like that. If she believes the super-hero is strong, it doesn't follow that she believes the reporter is strong, even if they're the same person. If she wants to marry the super-hero, it doesn't follow that she wants to marry the reporter. And so on. That's why you can't use Leibniz's Law with properties having to do with what people believe, doubt, know, wish for, and so on.
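Stated schematically, the form of Leibniz's Law at issue here (the indiscernibility of identicals) and its failure in belief contexts can be written as follows. This is only a sketch: the symbols $s$ (the super-hero), $r$ (the reporter), $\ell$ (Lois), $\mathrm{Kiss}$, $\mathrm{Strong}$, and the belief operator $\mathrm{Bel}_{\ell}$ are notation assumed for illustration, not notation used elsewhere in these notes.

```latex
% Leibniz's Law (indiscernibility of identicals):
\forall x\,\forall y\,\bigl(x = y \rightarrow (F(x) \leftrightarrow F(y))\bigr)

% Non-mental relation: substitution of identicals goes through.
s = r,\quad \mathrm{Kiss}(\ell, s) \;\vdash\; \mathrm{Kiss}(\ell, r)

% Belief context: the same substitution fails.
s = r,\quad \mathrm{Bel}_{\ell}\bigl[\mathrm{Strong}(s)\bigr] \;\nvdash\; \mathrm{Bel}_{\ell}\bigl[\mathrm{Strong}(r)\bigr]
```

The first two lines behave as the kissing and kicking examples do; the third records that what Lois believes is sensitive to how the person is presented to her, not just to which person it is.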

Another distinctive feature of intentional states is that they can be about things which don't exist. For instance, a child may believe there is a troll hiding under her bed, even though no trolls have ever existed or ever will. But nobody can kiss a troll, or kick a troll, unless there really are trolls around to kick.

We sometimes ascribe intentional states to computers and other man-made devices. For instance, you might say that your chess-playing computer wants to castle king-side. But your chess-playing computer probably does not have any original intentionality. It is too simple a device. Such intentionality as it has it gets from the intentions and beliefs of its programmers. (Or perhaps we're just "reading" intentionality into the program, in the way we read emotions into baby dolls and stuffed animals.)

However, the causal theorist about the mind believes that if we have a computer running a sophisticated enough program, then the computer will have its own, original intentional states. This is the view Searle wants to argue against.

Functionalism says any way of implementing the program gives you everything that's needed for there to be a mind, real beliefs, understanding, intelligence, and so on. Well, here's one way that the program could be implemented:

Jack does not understand any Chinese. However, he inhabits a room which contains a book with detailed instructions about how to manipulate Chinese symbols. He does not know what the symbols mean, but he can distinguish them by their shape. If you pass a series of Chinese symbols into the room, Jack will manipulate them according to the instructions in the book, writing down some notes on scratch paper, and eventually will pass back a different set of Chinese symbols. This results in what appears to be an intelligible conversation in Chinese. (In fact, we can suppose that "the room" containing Jack and the book of instructions passes a Turing Test for understanding Chinese.)

According to Searle, Jack does not understand Chinese, even though he is manipulating symbols according to the rules in the book. So manipulating symbols according to those rules is not enough, by itself, to enable one to understand Chinese. It would not be enough, by itself, to enable any system implementing those rules to understand Chinese. Some extra ingredient would be needed. And there's nothing special about the mental state of "understanding" here. Searle would say that implementing the Chinese room software does not, by itself, suffice to give a system any intentional states: no genuine beliefs, or desires, or intentions, or hopes or fears, or anything. It does not matter how detailed and sophisticated that software is. Searle writes:

Such intentionality as computers appear to have is solely in the minds of those who program them and those who use them, those who send in the input and those who interpret the output.

The aim of the Chinese room example was to try to show this by showing that as soon as we put something into the system that really does have intentionality (a man), and we program him with the formal program, you can see that the formal program carries no additional intentionality. It adds nothing, for example, to a man's ability to understand Chinese. (p. 368)

Searle responds to the Systems Reply as follows:

My response to the systems theory is quite simple: let the individual internalize all of these elements of the system. He memorizes the rules in the ledger and the data banks of Chinese symbols, and he does all the calculations in his head. The individual then incorporates the entire system. There isn't anything at all to the system that he does not encompass. We can even get rid of the room and suppose he works outdoors. All the same, he understands nothing of the Chinese, and a fortiori neither does the system, because there isn't anything in the system that isn't in him. If he doesn't understand, then there is no way that the system could understand because the system is just a part of him. (p. 359)

There are several problems with Searle's response.

In the first place, his claim "he understands nothing of the Chinese, and a fortiori neither does the system, because there isn't anything in the system that isn't in him" relies on a dubious form of inference. This is not a valid inference:

He doesn't weigh five pounds, and a fortiori neither does his heart, because there isn't anything in his heart that isn't in him.

Nor is this:

He wasn't designed by the Pentagon, and a fortiori neither was the Chinese room system, because there isn't anything in the system that isn't in him.

So why should the inference work any better when we're talking about whether the system understands Chinese?

A second, and related, problem is Searle's focus on the spatial location of the Chinese room system. This misdirects attention from the important facts about the relationship between Jack and the Chinese room system. Let me explain.

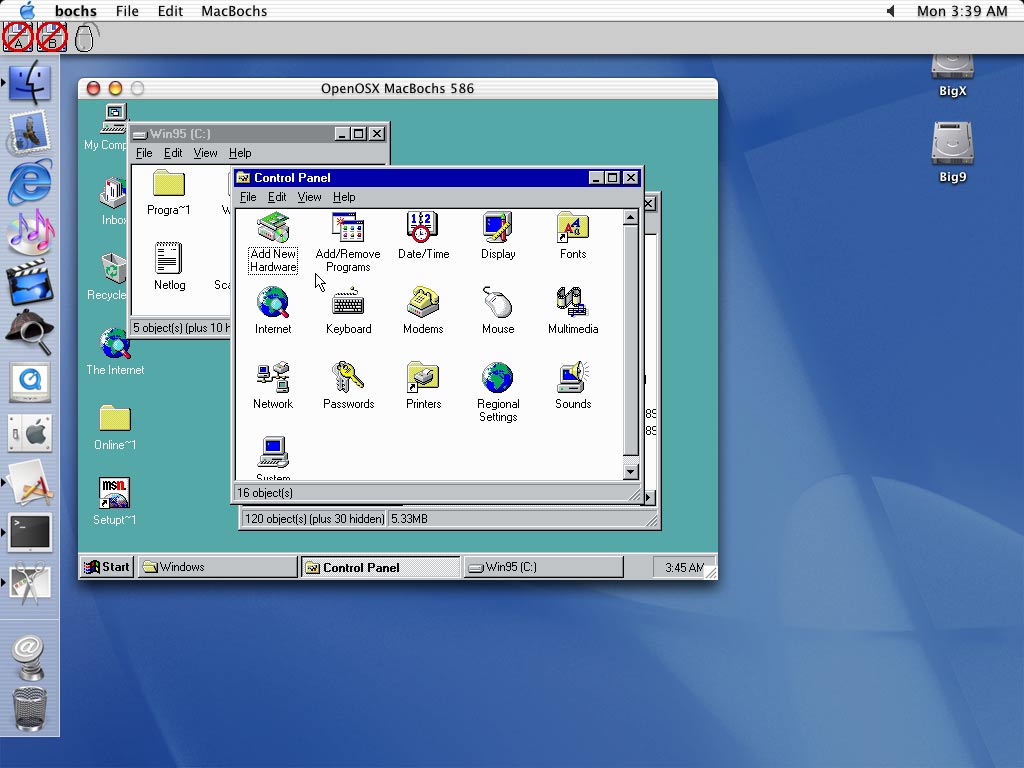

Some computing systems run software that enables them to emulate other operating systems, and software written for those other operating systems. For instance, you can buy software that lets your Macintosh emulate Windows. Suppose you do this. Now consider two groups of software running on your Macintosh: (i) the combination of the Macintosh OS and all the programs it's currently running (including the emulator program), and (ii) the combination of the Windows OS and the activities of some program it's currently running. We can note some important facts about the relationship between these two pieces of software:

It's this notion of one piece of software incorporating another piece of software which is important in thinking about the relation between Jack and the Chinese room software. According to the causal theorist about the mind, when Jack memorizes all the instructions in the Chinese book, he becomes like the Mac software, and the Chinese room software becomes like the emulated Windows software. Jack fully incorporates the Chinese room software. That does not mean that Jack shares all the states of the Chinese room software, nor that it shares all of his states. If the Chinese room software crashes, Jack may keep going fine. If the Chinese room software is in a state of believing that China was at its cultural peak during the Han dynasty, that does not mean that Jack is also in that state. And so on. In particular, for the Chinese room software to understand some Chinese symbol, it is not required that Jack also understand that symbol.

The fact that when Jack has "internalized" the Chinese room software, it is then spatially internal to Jack, is irrelevant. This just means that the Chinese room software and Jack's software are being run on the same hardware (Jack's brain). It does not mean that any states of the one are thereby states of the other.
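The relationship between an incorporating system and the software it incorporates can be illustrated with a toy sketch. Everything here is hypothetical and chosen only for illustration: the class names `Host` and `Guest`, the state keys, and the `"crash"` input are assumptions, and the code is not a model of any real emulator or of Searle's rule book. It shows only the structural point: the host carries out every step of the guest, yet the guest's states are not the host's states, and a guest crash leaves the host running.

```python
class GuestCrashed(Exception):
    """Raised when the emulated (guest) program fails."""


class Guest:
    """Hypothetical emulated program with its own private state."""

    def __init__(self):
        # A state that belongs to the guest only (illustrative key).
        self.state = {"believes_han_peak": True}

    def step(self, symbol):
        # The guest's own rule: fail on "crash", otherwise produce a reply.
        if symbol == "crash":
            raise GuestCrashed("guest program failed")
        return f"reply-to-{symbol}"


class Host:
    """Hypothetical host that implements the guest by executing its steps."""

    def __init__(self):
        # The host's state is kept separately from the guest's state.
        self.state = {"understands_chinese": False}
        self.guest = Guest()
        self.running = True
        self.guest_alive = True

    def run_guest(self, symbol):
        # The host does all the work of running the guest, but a guest
        # failure is contained: the host itself keeps running.
        if not self.guest_alive:
            return None
        try:
            return self.guest.step(symbol)
        except GuestCrashed:
            self.guest_alive = False
            return None
```

On this sketch, a call such as `Host().run_guest("ni hao")` returns the guest's reply without adding anything to the host's own `state`. Whether a mind stands to the Chinese room software as `Host` stands to `Guest` is, of course, exactly what is in dispute; the code only shows that "incorporating" a program need not mean sharing its states.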

In the causal theorist's view, what goes on when Jack "internalizes" the Chinese room software is this. Jack's body then houses two distinct intelligent systems--similar to people with multiple personalities. The Chinese room system is intelligent. Jack implements its thinking (as the Mac emulation software implements the activities of some Windows software). But Jack does not thereby think the Chinese room system's thoughts, nor need Jack even be aware of those thoughts. Neither of the intelligent systems in Jack's body is able to communicate directly with the other (by "reading each other's mind," or anything like that). And the Chinese room system has the peculiar feature that its continued existence, and the execution of its intentions, depend on the other system's activities and work schedule.

This would be an odd set-up, were it to occur. (Imagine Jack trying to carry on a discussion with the Chinese room software, with the help of a friend who does the translation!) But it's not conceptually incoherent.