Behaviorism

As we mentioned before, the behaviorist says that all there is to having some mental state is being disposed to behave certain ways in response to specific kinds of stimulation.

What does this mean, to be disposed to behave a certain way?

First, consider the notion of a counterfactual claim. This is a claim about what would have happened if the world had been different in certain ways. For instance, a true counterfactual claim is:

If I had dropped the chalk out the window, it would have fallen to the ground and shattered.

A false counterfactual claim is:

If I had dropped the chalk out the window, it would have turned into an angel and flown to heaven.

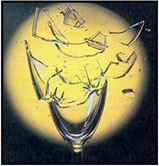

A disposition is a related notion. Dispositions include properties like being crushable, being fragile, and being soluble. Something can be crushable even though it is never crushed. It merely has to be such that it would be crushed if certain sorts of gentle forces were applied to it. Similarly, a glass can be fragile even though it never breaks. It merely has to be such that it would break, if it were struck in certain ways or if it were dropped from a modest height. And a sugar cube which never dissolves in water can still be soluble in water. It merely has to be such that it would dissolve, if it were placed in water. In general, having a disposition does not require that a thing actually undergo any changes; it only requires that the thing would undergo those changes if it were placed in the appropriate circumstances.

We call these events of being crushed, breaking, dissolving, and so on, the manifestations of the relevant dispositions. Something can have a disposition even if it never happens to manifest it. Every sugar cube is soluble, but not every sugar cube dissolves, because some sugar cubes are never placed in water.

Usually, there will be some underlying facts about the object in virtue of which it has the dispositions it has. We call these underlying facts the categorical basis of the disposition. For instance, the sugar cube is disposed to dissolve in water, and the categorical basis of this disposition is a certain kind of molecular and crystalline structure, in virtue of which the sugar will dissolve when placed in water.

A disposition might have different categorical bases in different objects. For instance, fragility has one categorical basis in crystal wine glasses and a different categorical basis in soap bubbles. The underlying properties which make a wine glass likely to break when struck are different from the underlying properties which make a soap bubble liable to break when struck. Houses of cards will have yet further properties. But wine glasses, soap bubbles, and houses of cards still share a single disposition, fragility. They are all disposed to break when struck.

A behaviorist about intelligence says that being intelligent consists in being disposed to give sophisticated, sensible answers to arbitrary questions. Since that's all there is to being intelligent, on this behaviorist's view, and since passing the Turing Test shows that a thing can do this, passing the Turing Test guarantees that the thing in question is genuinely intelligent. The behavior isn't merely a sign or evidence that the thing is intelligent. Rather, behaving in the right ways is all there is to being intelligent.

This kind of behaviorism was one of the first worked-out materialist views about the mind. It's a materialist view in that it doesn't appeal to any souls or independent realm of mental properties; it tries to explain everything in terms of ordinary physical properties, like behavior and dispositions to behave. So if you wanted to reject dualism, you can see why behaviorism might seem initially attractive.

The Causal Theory of Mind

If you find behaviorism hard to believe, you have lots of company.

Behaviorists say that even properties like having a toothache can be defined in terms of how a subject is disposed to behave, in response to specific kinds of stimulation. On their view, all there is to having a toothache is being disposed to moan, hold your cheek, and make appointments at the dentist. This is a very implausible view about toothaches. There seems to be a real difference between having a toothache and acting as if I have a toothache. Certainly when I have a toothache, there's a lot more going on than just that external behavior. There's also something pretty awful going on inside me, which makes me (or causes me) to behave in those ways. If we have to pick something in the behaviorist's picture to be my toothache, it seems like the categorical basis of my behavioral disposition (as it may be, some C-fibers firing in my brain) is a better candidate than the disposition itself. After all, that's what causes me to behave as I do.

Similarly, the behaviorist denies that your belief that Queens is in Connecticut is any inner mental state that causes you to give certain answers to questions like "Where is Queens located?" Rather, being disposed to give those answers is all there is to believing that Queens is in Connecticut. But there seems to be a difference between really thinking that Queens is in Connecticut, and just behaving as though you do. Intuitively, your belief seems to be more like an inner state of your brain that sometimes causes you to behave as you do.

Nowadays most materialists reject behaviorism in favor of more complicated views of the mind. These more complicated views will make the question whether you're intelligent turn not just on how you behave, but also on what's going on inside you. For instance, a clay puppet controlled from Mars, a humanoid robot governed by a sophisticated computer in its head, and a human being might all behave in the same way. But according to these more complicated materialist views, only the robot and the human being have the right kinds of internal structure to count as having thoughts of their own. The clay puppet doesn't. (If you consider the clay puppet together with its Martian operators, then there might be a genuine intelligence on the scene. But for right now, we're just asking about the puppet itself.) So behaving in certain ways does not guarantee that you are intelligent. It merely counts as evidence that you're intelligent.

We'll call these new materialist theories Causal Theories of the Mind. These views are often explained by drawing analogies between the mind and computers, or computer programs. Early versions of these views identified toothaches and beliefs with events going on in our brains. But then it occurred to philosophers that we might want to count some animals or space aliens as having pains and beliefs, too, even if they turned out not to have brains like ours. Maybe they won't have a brain at all, but rather purple goo. If we identified pain with having your C-fibers fire, then nobody who didn't have C-fibers could be in pain. That seems the wrong result.

So Causal Theorists of Mind got more sophisticated. They realized that mental states like pain and belief could be multiply realized. That is, pain might consist in C-fibers firing in our brains, but in purple goo squirting in such-and-such a way in the space alien's head. They said we should think of our brains and the space aliens' purple goo as being two different kinds of computer that are running a common program. And then they proposed that we identify pains, beliefs, and in general, our whole minds, with the software that these computers are running. This sort of view is called Functionalism. It's the view that Armstrong and Lycan are defending in their articles.

Unlike the behaviorists, the Causal Theorists agree that mental states are real inner states that cause our behavior. Behaving in certain ways is not all there is to thinking and being intelligent. It just gives us evidence that someone is thinking and reasoning and so on.

As I said, the most promising versions of the Causal Theory compare the mind to a computer program.

Let's look more closely at the notion of a computer program.

In very abstract terms, a computer program can be understood like this. Any system implementing the program will have a number of different internal states. The program specifies:

- how the system should respond to input

  This will typically involve the system's changing its internal state. How the system responds to input may depend on what internal state the system is already in.

- how the system's internal states are causally related to each other, how they're organized internally

- under what conditions the system will give a certain output

For instance, let's design a piece of software to run a rudimentary Coke machine. We suppose that Coke costs 15¢, and that the machine only accepts nickels and dimes.

The Coke machine has three states: ZERO, FIVE, TEN. If a nickel is inserted and the machine is in state ZERO, it goes into state FIVE and waits for more input. If a nickel is inserted and the machine is already in state FIVE, it goes into state TEN and waits for more input. If a nickel is inserted and the machine is already in state TEN, then the machine dispenses a Coke and goes into state ZERO. If a dime is inserted and the machine is in state ZERO, it goes into state TEN and waits for more input. If a dime is inserted and the machine is already in state FIVE, then it dispenses a Coke and goes into state ZERO. If a dime is inserted and the machine is already in state TEN, then it dispenses a Coke and goes into state FIVE, keeping the extra 5¢ as credit. (Alternatively, if we wanted the machine to give change, it could dispense a Coke and a nickel and go into state ZERO.)

Our Coke machine's behavior can be spelled out in a table, which tells us, for each combination of internal state and input, what new internal state the Coke machine should go into, and what output it should produce:

| Input | Present State | Go into this State | Produce this Output |
|---|---|---|---|
| Nickel | ZERO | FIVE | - |
| Nickel | FIVE | TEN | - |
| Nickel | TEN | ZERO | Coke |
| Dime | ZERO | TEN | - |
| Dime | FIVE | ZERO | Coke |
| Dime | TEN | FIVE | Coke |

We call a table of this sort a machine table. Any computer program we know how to write, no matter how sophisticated, can be decomposed into this form (assuming it only needs access to a prespecified, finite amount of memory). You can think of machine tables as a kind of very simple, but mathematically powerful programming language. Just as a single program might be written in any of several different programming languages (C or Python or Java), so too that program could be written out in terms of a machine table.
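To make the connection between a machine table and an ordinary program concrete, here is a minimal Python sketch of the Coke machine's table. The names `MACHINE_TABLE`, `step`, and `run` are illustrative choices, not anything from the readings, and representing "no output" as `None` is just an assumption made for convenience.

```python
# Machine table for the Coke machine:
# (input, present state) -> (state to go into, output to produce).
# None stands for "no output". All names here are illustrative assumptions.
MACHINE_TABLE = {
    ("nickel", "ZERO"): ("FIVE", None),
    ("nickel", "FIVE"): ("TEN", None),
    ("nickel", "TEN"): ("ZERO", "Coke"),
    ("dime", "ZERO"): ("TEN", None),
    ("dime", "FIVE"): ("ZERO", "Coke"),
    ("dime", "TEN"): ("FIVE", "Coke"),
}


def step(state, coin):
    """Look up one row of the table and return (next_state, output)."""
    return MACHINE_TABLE[(coin, state)]


def run(coins, state="ZERO"):
    """Feed a sequence of coins to the machine; return (final_state, outputs)."""
    outputs = []
    for coin in coins:
        state, output = step(state, coin)
        if output is not None:
            outputs.append(output)
    return state, outputs


if __name__ == "__main__":
    print(run(["nickel", "dime"]))  # ends in ZERO with one Coke dispensed
    print(run(["dime", "dime"]))    # ends in FIVE with one Coke dispensed
```

Notice that the program consists of nothing but the table plus a loop that looks rows up. Everything specific to the Coke machine lives in the table itself.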

You may see some authors referring to Turing Machines. These aren't real physical machines; they're another sort of mathematical abstraction used in discussing computer software. (They are named after the same Alan Turing who introduced the Turing Test.) We use Turing Machines in the same way we use machine tables. They provide us with mathematically simple and elegant programming languages, which are useful for logical and philosophical discussions, even though it'd be quite inefficient to use them in practice.

Notice that when we described our Coke machine, we didn't say what it was built out of. We just talked about how it works, that is, how its internal states interacted with each other and with input to produce output. Anything which works in the way we specified will suffice. What sort of stuff it's made out of doesn't matter. It could be made out of silicon chips, or LEGOs, or out of little guys performing the instructions specified in the machine table.

In addition, we said nothing about how the internal states of the Coke machine were constructed. Some Coke machines might be in the ZERO state in virtue of having a LEGO gear in a certain position. Other Coke machines might be in the ZERO state in virtue of having a current passing through a certain transistor. We understand what it is for the Coke machine to be in these states in terms of how the states interact with each other and with input to produce output. Any system of states which interact in the way we described counts as an implementation of our ZERO, FIVE, and TEN states.

So our machine tables show us how to understand a system's internal states in such a way that they can be implemented or realized via different physical mechanisms. The internal states described in our machine tables are multiply realizable.
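As a rough illustration of multiple realizability, the following Python sketch gives two hypothetical realizations of the same Coke machine. One keeps a running count of cents; the other tracks the position of an imagined three-position gear. The class names, the gear positions, and the `behave_alike` check are all assumptions introduced for this example.

```python
from itertools import product


class CounterMachine:
    """Realizes the states as an integer number of cents of credit."""

    def __init__(self):
        self.credit = 0  # 0, 5, 10 play the roles of ZERO, FIVE, TEN

    def insert(self, coin):
        self.credit += 5 if coin == "nickel" else 10
        if self.credit >= 15:
            self.credit -= 15
            return "Coke"
        return None


class GearMachine:
    """Realizes the states as positions of a hypothetical three-position gear."""

    POSITIONS = ["left", "middle", "right"]  # roles of ZERO, FIVE, TEN

    def __init__(self):
        self.gear = "left"

    def insert(self, coin):
        clicks = 1 if coin == "nickel" else 2
        index = self.POSITIONS.index(self.gear) + clicks
        self.gear = self.POSITIONS[index % 3]
        # The gear wrapping around past "right" is what releases a Coke.
        return "Coke" if index >= 3 else None


def outputs(machine, coins):
    """Record what the machine dispenses for each coin in turn."""
    return [machine.insert(coin) for coin in coins]


def behave_alike(max_length=6):
    """Check both machines give the same outputs on every short coin sequence."""
    for length in range(max_length + 1):
        for coins in product(["nickel", "dime"], repeat=length):
            if outputs(CounterMachine(), coins) != outputs(GearMachine(), coins):
                return False
    return True
```

The two classes share no internal representation, yet each has three states that interact with input and output in the way the machine table specifies. On the view being described, that is all it takes for both to count as implementations of ZERO, FIVE, and TEN.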

Computers are just very sophisticated versions of our Coke machine. They have a large number of internal states. Programming the computer involves linking these internal states up to each other and to the outside of the machine so that, when you put some input into the machine, the internal states change in predictable ways, and sometimes those changes cause the computer to produce some output (dispense a Coke).

From an engineer's point of view, it can make a big difference how a particular program gets physically implemented. But from the programmer's point of view, the underlying hardware isn't important. Only the software matters. That is, it only matters that there be some hardware with some internal states that causally interact with each other, and with input and output, in the way the software specifies.

The causal theorist or functionalist about the mind thinks that all there is to being intelligent, really having thoughts and other mental states, is implementing some very complicated software. In us, this software is implemented by our brains, but it could also be implemented on other hardware, like a Martian brain or a computer. And if it were, then the Martian brain and the computer would have real thoughts and mental states, too. So long as there's some hardware with some internal states that stand in the right causal relations to each other and to input and output, you've got a mind.

A line of reasoning often offered in support of this view is the following:

- At some level of description, the brain is a device that receives complex inputs from the sensory organs and sends complex outputs to the motor system. The brain's activity is well-behaved enough to be specifiable in terms of various (incredibly complicated) causal relationships, just like our Coke machine. We don't yet know what those causal relationships are. But we know enough to be confident that they exist. So long as they exist, we know that they could be spelled out in some (incredibly complicated) machine table.

- This machine table describes a computer program which as a matter of fact is implemented by your brain. But it could also be implemented by other sorts of hardware. Why should the hardware make any difference to whether any thinking is going on? Wouldn't any other hardware, which did exactly the same job as your brain, in terms of the causal relations between its internal states, sensory inputs, and outputs to the motor system, yield as much mentality as your brain does? Why should the actual physical make-up of your brain be important?

-

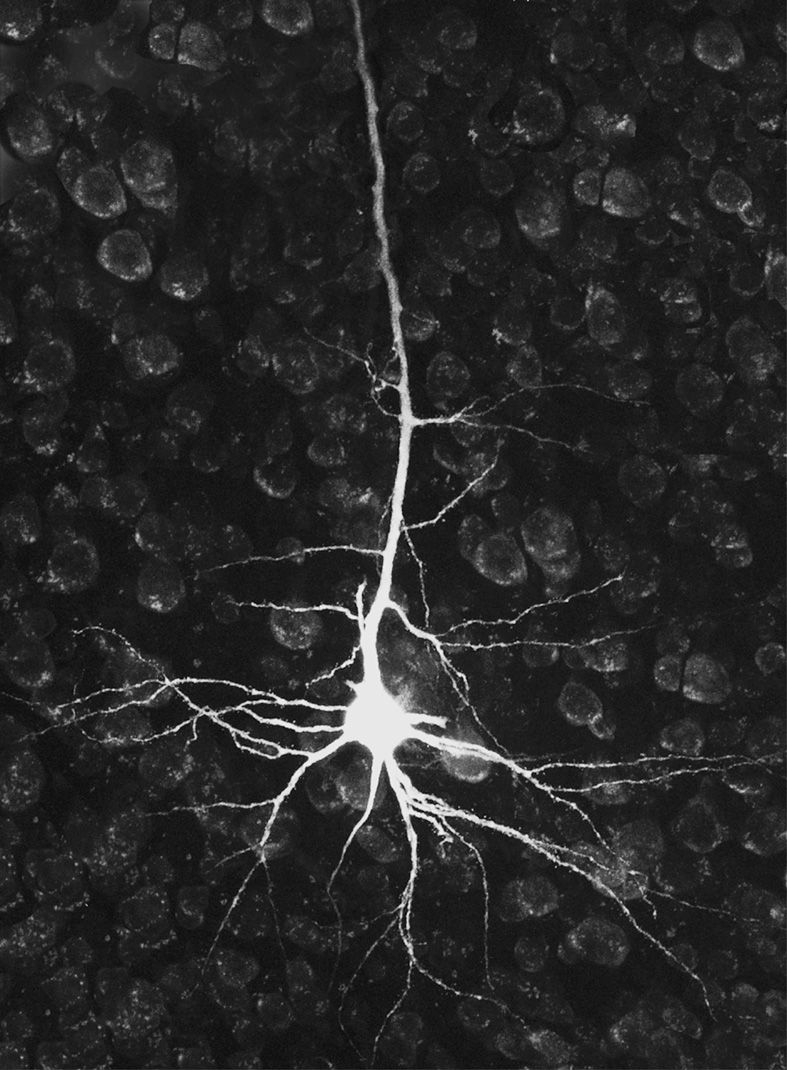

Human neurons are very simple devices. If we replaced just one of your neurons with a tiny computer chip that performed the same job as the neuron it was replacing, doesn't it seem plausible that you would still have a mind, and continue to be capable of the same mental processes as before? You would keep walking and talking, just like before. Your brain would continue to process information just like before. None of your other neurons would even notice the difference! So how could the replacement make any difference to your mental life?

Human neurons are very simple devices. If we replaced just one of your neurons with a tiny computer chip that performed the same job as the neuron it was replacing, doesn't it seem plausible that you would still have a mind, and continue to be capable of the same mental processes as before? You would keep walking and talking, just like before. Your brain would continue to process information just like before. None of your other neurons would even notice the difference! So how could the replacement make any difference to your mental life?

- And if we could replace one neuron with a tiny computer chip, why couldn't we gradually keep replacing your neurons, one by one, until they were all replaced? Now there are no human neurons left; the jobs they were doing are now performed by tiny silicon chips. Over this process of gradual replacement, there doesn't seem to be any point at which you lose your ability to think. You continue walking and talking, just like before. Your "brain" continues to process information in the same way. It's plausible that even you wouldn't be able to tell that any change had taken place.

For these reasons, the causal theorists think that the neurophysiological details of how your mental software is implemented are not important to whether you have real mentality. They think that any hardware which implements that software will also have a mental life, indeed the same mental life you have. Mental states, like the states of our Coke machine, can be implemented on many different sorts of hardware.
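The gradual-replacement reasoning can be mimicked in a toy model. The Python sketch below is only an illustration under strong simplifying assumptions: the "neurons" are simple threshold units, the "chips" are lookup tables built to match them, and the tiny four-unit network is invented for the example. It shows that swapping units one at a time leaves the system's input-output behavior unchanged. Whether preserving that causal organization is enough for mentality is the philosophical claim at issue, and the code does not establish it.

```python
from itertools import product


class Neuron:
    """A toy 'biological' unit: fires (1) when its weighted input reaches a threshold."""

    def __init__(self, weights, threshold):
        self.weights = weights
        self.threshold = threshold

    def fire(self, inputs):
        total = sum(w * x for w, x in zip(self.weights, inputs))
        return 1 if total >= self.threshold else 0


class Chip:
    """A toy 'silicon' unit built to do the same job as the neuron it replaces.

    It stores a lookup table rather than weights, so its inner workings differ.
    """

    def __init__(self, neuron):
        n = len(neuron.weights)
        self.table = {
            inputs: neuron.fire(inputs) for inputs in product((0, 1), repeat=n)
        }

    def fire(self, inputs):
        return self.table[inputs]


def respond(hidden, output, stimulus):
    """Pass a two-bit stimulus through the hidden units, then the output unit."""
    hidden_signals = tuple(unit.fire(stimulus) for unit in hidden)
    return output.fire(hidden_signals)


def behavior(hidden, output):
    """The system's response to every possible two-bit stimulus."""
    return [respond(hidden, output, s) for s in product((0, 1), repeat=2)]


def replace_gradually():
    """Swap each unit for a chip in turn; record whether behavior is preserved."""
    hidden = [Neuron((1, 1), 1), Neuron((1, 1), 2), Neuron((1, -1), 1)]
    output = Neuron((1, -1, 1), 1)
    before = behavior(hidden, output)
    preserved = []
    for i in range(len(hidden)):
        hidden[i] = Chip(hidden[i])
        preserved.append(behavior(hidden, output) == before)
    output = Chip(output)
    preserved.append(behavior(hidden, output) == before)
    return preserved
```

Calling `replace_gradually()` returns one boolean per replacement step. Each is `True`, because every chip reproduces exactly the input-output mapping of the unit it replaced.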
